Okay, don’t freak out, but Meta is about to start processing your private DMs via its AI tools.

Well, sort of.

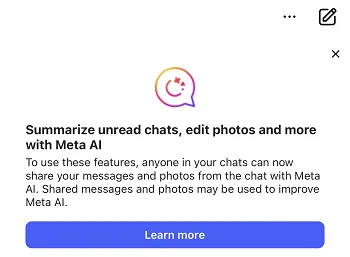

Over the past couple of days, Meta users have reported seeing this new pop-up in the app, which alerts them to its latest chat AI features.

As you can read for yourself, Meta’s essentially trying to cover its butt on data privacy by letting you know that, yes, you can now summon Meta AI to answer your questions and queries within any of your chats across Facebook, Instagram, Messenger and WhatsApp. But the cost of doing so is that any information within that chat could then be fed into Meta’s AI black box, and potentially used for AI training.

“Because others in your chats can share your messages and pictures with Meta AI to use AI features, be mindful before sharing sensitive information in chats that you do not want AIs to use, such as passwords, financial information or other sensitive information. We take steps to try to remove certain personal identifiers from your messages that others share with Meta AI prior to improving AI at Meta.”

I mean, the whole endeavor here seems somewhat flawed. The value of having Meta AI accessible within your chats (i.e. if you mention @MetaAI, you can ask a question in-stream) is unlikely to be significant enough to justify having to stay aware of everything that you share within that chat, because you could alternatively just have a separate Meta AI chat open, and use that for the same purpose.

But Meta’s keen to show off its AI tools wherever it can. Which means that it now has to warn you that if there is anything within your DMs that you don’t want to be potentially fed into its AI system, then potentially spat out in another form, based on another user’s queries, then basically don’t post it in your chats.

Or don’t use Meta AI within your chats.

And before you read some post somewhere which says that you need to declare, in a Facebook or IG post, that you don’t give permission for such, I’ll save you the time and effort: That’s 100% incorrect.

You’ve already granted Meta permission to use your information, within that long list of clauses that you skimmed over, before tapping “I agree” when you signed up to the app.

You can’t opt out of such. The only way to avoid Meta AI potentially accessing your information is to:

- Not ask @MetaAI questions in your chats, which seems like the easiest solution

- Delete your chat

- Delete or edit any messages within a chat that you want to keep out of its AI training set

- Stop using Meta’s apps entirely

Meta’s within its rights to use your information in this way, if it chooses, and by providing you with this pop-up, it’s letting you know exactly how it could do so, if somebody in your chat uses Meta AI.

Is that a huge overstep of user privacy? Well, no, but it also depends on how you use your DMs, and what you might want to keep private. I mean, the chances of an AI model re-creating your personal information are not very high, but Meta is warning you that this could happen if you invite Meta AI into your chats.

So again, if in doubt, don’t use Meta AI in your chats. You can always ask Meta AI your question in a separate chat window if you need to.

You can read more about Meta AI and its terms of service here.